Date: August 1, 2024

I configured a Kubernetes cluster with kubeadm using 3 Raspberry pis.

![]()

Raspberry PI - Kuberentes home cluster

Kubernetes is a complex distributed system, comprising numerous components that work together to manage containerized applications at scale. I decided to explore the inner workings of Kubernetes and various components by setting up the Raspberry Pi cluster in home network.

Table of Contents

- Motivation

- Raspberry Pi Setup

- Kubernetes

- Metrics server

- Nginx Ingress Controller

- Metallb

- Let's Encrypt Certificate

- Docker Registry

- Argo CD

- Reference

Motivation

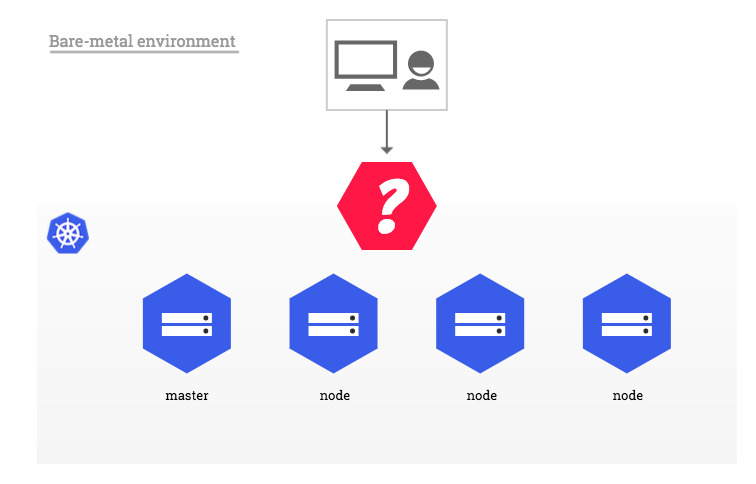

Why On-Premise Kubernetes?

I previously built a Kubernetes Cluster on EKS. Due to cloud maintenance costs, however, I wanted to have a private on-premise setup for complete control over network, hardware, and security. It would be a good testing bed of application building for Kubernetes environment.

I initially tried a 3-master node MicroK8s cluster on VirtualBox and a single-node setup using Minikube. But I encountered network instability in Virtualbox and lack of scalability in minikube.

So I decided to build a Raspberry Pi cluster with 1 master and 2 worker nodes for better stability and scalability, while allowing me to manually configure the control plane for a deeper understanding of Kubernetes management.

Raspberry Pi Setup ¶

Kubernetes consists of a control plane (master node) and worker nodes. For high availability, an odd number of master nodes (1, 3, 5, etc.) and more than 2 worker nodes are ideal. I opted for a minimal, scalable setup with 1 master and 2 worker nodes. To ensure durability and better performance, I used SSDs instead of microSD cards. NVMe SSDs require an M.2 HAT+ to connect them to the Raspberry Pi and OS install.

Without fans

- Hardware

- 3 x Raspberry pi 5 (1 master, 2 workers)

- 8GB Memory, 4-core CPU

- 3 x Raspberry Pi M.2 HAT+ (1 master, 2 workers)

- 3 x Raspberry Pi Active Cooler

- 3 x SSD: SK Hynix BC901 256GB

- 3 x Ethernet cable

- 3 x Usb-C cable (power adapter ideally >= 25W = 5A x 5V)

- 1 x TP LINK Switch Hub TL-SG105

- 3 x Raspberry pi 5 (1 master, 2 workers)

I referred to a LINK to configure NVMD SSD Boot.

Installing fans reduces the SSD and CPU temperature drastically

Install OS

I installed Ubuntu Server 24.04 LTS. The installation process is as follows:

- Download and Run raspberrypi/rpi-imager.

- Use NVME SSD Adapter to connect your SSD into your PC.

- Use rpi-imager to flash the SSD with Ubuntu server 24.04 LTS image.

- Once done, extract the SSD from the SSD adapter, equip it into the Raspbbery PI and boot with account you setup during the flashing process. (Default id/pw: ubuntu/ubuntu)

Enable the external PCI Express port

Now that we are logged into the Raspberry Pi 5 server, use command line interface to update some configurations. If you mounted the m.2 NVME SSD into the PI using Raspberry PI's official NVME HAT, the external PCIE port is enabled by default. Otherwise, do the following:

sudo vim /boot/firmware/config.txt

# Add to bottom of /boot/firmware/config.txt

dtparam=pciex1

# Note: You could also just add the following (it is an alias to the above line)

# dtparam=nvme

# Optionally, you can control the PCIe lane speed using this parameter

# dtparam=pciex1_gen=3

Set NVMe early in the boot order

# Edit the EEPROM on the Raspberry Pi 5.

sudo EDITOR=vim rpi-eeprom-config --edit

# Change the BOOT_ORDER line to the following:

BOOT_ORDER=0xf416

# Add the following line if using a non-HAT+ adapter:

PCIE_PROBE=1

# Press Ctrl-O, then enter, to write the change to the file.

# Press Ctrl-X to exit nano (the editor).

Network settings

Check linux set-up for initial OS setups.

- Time sync

- Change Hostname

- SSH access

Kubernetes ¶

We will use v1.30.3 Kubernetes.

- Prerequisite

- Container runtime

- Installing kubeadm, kubelet and kubectl

- Creating a cluster with kubeadm

Prerequisite

- Swap memory off

- "The default behavior of a kubelet was to fail to start if swap memory was detected on a node."

- You MUST disable swap if the kubelet is not properly configured to use swap.

# disable swapping temporarily.

sudo swapoff -a

# To make this change persistent across reboots, make sure swap is disabled in config files. Comment out swap

# Disable swap permanently

# vim /etc/fstab

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# check Swap is disabled

free -h

total used free shared buff/cache available

Mem: 7.8Gi 900Mi 2.1Gi 5.2Mi 5.0Gi 6.9Gi

-> Swap: 0B 0B 0B

- Required ports

- Check required ports for both control plane and worker nodes

- https://kubernetes.io/docs/reference/networking/ports-and-protocols/

nc 127.0.0.1 6443 -v

- Configuring a cgroup driver

- https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/configure-cgroup-driver/

# On Linux, control groups are used to constrain resources that are allocated to processes.

# Both the `KUBELET` and the underlying `CONTAINER RUNTIME` need to interface with control groups

# to enforce resource management for pods and containers and set resources such as cpu/memory requests and limits.

# There are two cgroup drivers available: cgroupfs-default cgroup driver in the kubelet, systemd.

# The Container runtimes page explains that the systemd driver is recommended for kubeadm based setups

# instead of the kubelet's default cgroupfs driver, because kubeadm manages the kubelet as a systemd service.

# cat << EOF > kubeadm-config.yaml

# kind: ClusterConfiguration

# apiVersion: kubeadm.k8s.io/v1beta3

# kubernetesVersion: v1.30.3

# ---

# kind: KubeletConfiguration

# apiVersion: kubelet.config.k8s.io/v1beta1

# cgroupDriver: systemd

# EOF

Container runtime

Kubernetes 1.30 requires that you use a runtime that conforms with the Container Runtime Interface (CRI); containerd, CRI-O, Docker Engine. You need to install a container runtime into each node in the cluster so that Pods can run there. (kubelet deprecated Docker support since Kubernetes>=1.24)

CRI-O

containerd

-

Getting started with containerd

-

Install and configure prerequisites

-

- Enable IPv4 packet forwarding

-

- cgroup drivers: systemd

-

- container runtime - containerd

# 1. Enable IPv4 packet forwarding

# By default, the Linux kernel does not allow IPv4 packets to be routed between interfaces.

# sysctl params required by setup, params persist across reboots

cat << EOF | sudo tee /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

EOF

# Apply sysctl params without reboot

sudo sysctl --system

# Verify that net.ipv4.ip_forward is set to 1 with:

sysctl net.ipv4.ip_forward

# 2. cgroup drivers

# On Linux, control groups are used to constrain resources that are allocated to processes.

# 3. container runtime - containerd

# https://github.com/containerd/containerd/blob/main/docs/getting-started.md

# Step 3-1: Installing containerd

wget https://github.com/containerd/containerd/releases/download/v1.7.20/containerd-1.7.20-linux-arm64.tar.gz

sudo tar Cxzvf /usr/local containerd-1.7.20-linux-arm64.tar.gz

# If you intend to start containerd via systemd, you should also download

# the containerd.service unit file into /usr/local/lib/systemd/system/containerd.service

sudo mkdir -p /usr/local/lib/systemd/system/

sudo wget -O /usr/local/lib/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo systemctl daemon-reload

sudo systemctl enable --now containerd

sudo systemctl status containerd

# Step 3-2: Installing runc

wget https://github.com/opencontainers/runc/releases/download/v1.1.13/runc.arm64

sudo install -m 755 runc.arm64 /usr/local/sbin/runc

# Step 3-3: Installing CNI plugins

wget https://github.com/containernetworking/plugins/releases/download/v1.5.1/cni-plugins-linux-arm64-v1.5.1.tgz

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-arm64-v1.5.1.tgz

# 4. You can find this file under the path /etc/containerd/config.toml.

# generate default config

sudo mkdir -p /etc/containerd

sudo su -

sudo containerd config default > /etc/containerd/config.toml

sudo cat /etc/containerd/config.toml

# 5. Configuring the systemd cgroup driver

# To use the systemd cgroup driver in /etc/containerd/config.toml with runc, set

sudo sed -i 's/SystemdCgroup \= false/SystemdCgroup = true/g' /etc/containerd/config.toml

cat /etc/containerd/config.toml

[plugins]

# ...

[plugins."io.containerd.grpc.v1.cri"]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

sudo systemctl restart containerd

sudo systemctl status containerd

nerdctl

"nerdctl is a Docker-compatible CLI for containerd"

- Pre-requisite

- containerd is installed, enabled, and running

- cni plugins is installed (previously

cni-plugins-linux-arm64-v1.5.1.tgz) - Configure rootlesskit

- Install Guide

# uidmap

sudo apt-get install uidmap -y

# RootlessKit

sudo apt-get install rootlesskit -y

wget https://github.com/containerd/nerdctl/releases/download/v2.0.0-rc.0/nerdctl-2.0.0-rc.0-linux-arm64.tar.gz

sudo tar Cxzvvf /usr/local/bin nerdctl-2.0.0-rc.0-linux-arm64.tar.gz

which nerdctl

/usr/local/bin/nerdctl

#sudo sh -c "echo 1 > /proc/sys/kernel/unprivileged_userns_clone"

sudo vim /etc/sysctl.d/99-rootless.conf

kernel.unprivileged_userns_clone=1

# apply in 99-rootless.conf to system

sudo sysctl --system

# check if it is applied to system

sudo sysctl kernel.unprivileged_userns_clone

# DO NOT RUN AS `root` !!!

containerd-rootless-setuptool.sh install

# Now, you can run without root privilege!

nerdctl version

nerdctl

Installing kubeadm, kubelet and kubectl

- For Kubernetes v1.30, you will install these packages on ALL OF YOUR MACHINES:

kubeadm: the command to bootstrap the cluster.kubelet: the component that runs on all of the machines in your cluster and does things like starting pods and containers.kubectl

# 1. Update the apt package index and install packages needed to use the Kubernetes apt repository:

sudo apt-get update

# apt-transport-https may be a dummy package; if so, you can skip that package

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

# 2. Download the public signing key for the Kubernetes package repositories. The same signing key is used for all repositories so you can disregard the version in the URL:

# If the directory `/etc/apt/keyrings` does not exist, it should be created before the curl command, read the note below.

# sudo mkdir -p -m 755 /etc/apt/keyrings

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

# 3. Add the appropriate Kubernetes apt repository.

# This overwrites any existing configuration in /etc/apt/sources.list.d/kubernetes.list

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

# 4. Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version:

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

# kublet : set node ip

sudo su -

local_ip="$(ip --json addr show eth0 | jq -r '.[0].addr_info[] | select(.family == "inet") | .local')"

echo $local_ip

cat > /etc/default/kubelet << EOF

KUBELET_EXTRA_ARGS=--node-ip=$local_ip

EOF

cat /etc/default/kubelet

exit

# 5. (Optional) Enable the kubelet service before running kubeadm:

sudo systemctl enable --now kubelet

Creating a cluster with kubeadm

- Initializing your

control-plane node(master node)

# --pod-network-cidr

# your Pod network must not overlap with any of the host networks (192.168.0.0/24)

# on master node:

POD_CIDR="10.100.0.0/16"

NODENAME=$(hostname -s)

MASTER_PRIVATE_IP=$(ip addr show eth0 | awk '/inet / {print $2}' | cut -d/ -f1)

sudo kubeadm init \

--apiserver-advertise-address="$MASTER_PRIVATE_IP" \

--apiserver-cert-extra-sans="$MASTER_PRIVATE_IP" \

--pod-network-cidr="$POD_CIDR" \

--controller-manager-extra-args "allocate-node-cidrs=false" \

--node-name "$NODENAME" \

-v=5

# NOTE: --controller-manager-extra-args "allocate-node-cidrs=false"

# prevent `kube-controller-manager-master` from trying to allocate podCidr.

# Calico will do it instead :)

# [addons] Applied essential addon: CoreDNS

# [addons] Applied essential addon: kube-proxy

# Your Kubernetes control-plane has initialized successfully!

# To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Alternatively, if you are the root user, you can run:

# export KUBECONFIG=/etc/kubernetes/admin.conf

# You should now deploy a pod network to the cluster.

# Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

# https://kubernetes.io/docs/concepts/cluster-administration/addons/

# Then you can join any number of worker nodes by running the following on each as root:

sudo kubeadm join 192.168.0.10:6443 --token mjgw9s.d29kh7dtjqig5owf \

--discovery-token-ca-cert-hash sha256:f3516dba4e89b3530a044ed531ab07ed00fea6a44104aae1d0ec1ab87357e14a

- Worker nodes

# set `node-role`

kubectl label node worker1 node-role.kubernetes.io/worker=worker

kubectl label node worker2 node-role.kubernetes.io/worker=worker

kubectl get no -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master NotReady control-plane 58s v1.30.3 192.168.0.10 <none> Ubuntu 24.04 LTS 6.8.0-1008-raspi containerd://1.7.20

worker1 NotReady worker 19s v1.30.3 192.168.0.11 <none> Ubuntu 24.04 LTS 6.8.0-1008-raspi containerd://1.7.20

worker2 NotReady worker 25s v1.30.3 192.168.0.12 <none> Ubuntu 24.04 LTS 6.8.0-1008-raspi containerd://1.7.20

Troubleshooting kubeadm

corednsis stuck in the Pending state. This is expected and part of the design.

k get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7db6d8ff4d-sv6bv 0/1 Pending 0 4h54m

coredns-7db6d8ff4d-vtc4p 0/1 Pending 0 4h54m

k describe pod coredns-7db6d8ff4d-sv6bv -n kube-system

# Warning FailedScheduling 7m50s (x6 over 4h45m) default-scheduler 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

- CIDR allocation failed

k logs kube-controller-manager-master -nkube-system

E0807 07:33:10.824783 1 controller_utils.go:262]

Error while processing Node Add/Delete: failed to allocate cidr from cluster cidr at idx:0:

CIDR allocation failed; there are no remaining CIDRs left to allocate in the accepted range

kubectl describe pod kube-controller-manager-master -n kube-system | grep cluster-cidr

kubectl describe pod kube-controller-manager-master -n kube-system | grep allocate-node-cidrs

# The --cluster-cidr=10.100.0.0/16 should match the pod network CIDR

# and is used by the controller manager to allocate pod CIDRs to nodes.

# So this seems to be a correct set-up of cidr

sudo cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cidr

- --allocate-node-cidrs=true

- --cluster-cidr=10.100.0.0/16

sudo vim /etc/kubernetes/manifests/kube-controller-manager.yaml

- --allocate-node-cidrs=false

# Error message is resolved!

kubectl describe nodes

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal CIDRNotAvailable 7m53s (x17 over 14h) cidrAllocator Node worker1 status is now: CIDRNotAvailable

-> Normal RegisteredNode 4m1s node-controller Node worker1 event: Registered Node worker1 in Controller

# https://devops.stackexchange.com/questions/17032/what-is-the-meaning-of-the-podcidr-field-in-the-node-spec-in-kubenretes

# https://github.com/kubernetes/kubernetes/issues/76761

# When using Calico, the podCIDR field on the node objects is not used or required.

# Calico handles IP allocation without needing this field to be set.

kubectl get nodes -o custom-columns=NAME:.metadata.name,CIDR:.spec.podCIDR

NAME CIDR

master <none>

worker1 <none>

worker2 <none>

# Instead of using podCIDR, Calico uses its own IPPool resource to manage the available IP ranges. You can view this with:

kubectl get ippool -o yaml

kubectl get blockaffinities -A

NAME AGE

master-10-100-219-64-26 20h

worker1-10-100-235-128-26 20h

worker2-10-100-189-64-26 20h

kubectl get ipamblocks -A

NAME AGE

10-100-189-64-26 20h

10-100-219-64-26 20h

10-100-235-128-26 20h

k get pod -A -o wide | grep -v 192.168. | grep -v Completed | grep worker2

10.100.189.xx

k get pod -A -o wide | grep -v 192.168. | grep -v Completed | grep master

10.100.219.xx

k get pod -A -o wide | grep -v 192.168. | grep -v Completed | grep worker1

10.100.235.xx

kubectl get ippool default-ipv4-ippool -o yaml

apiVersion: crd.projectcalico.org/v1

kind: IPPool

metadata:

annotations:

projectcalico.org/metadata: '{"creationTimestamp":"2024-08-07T03:17:23Z"}'

creationTimestamp: "2024-08-07T03:17:23Z"

generation: 1

name: default-ipv4-ippool

resourceVersion: "935"

uid: 04c9c442-e0a4-4a42-b481-2609ccde7bda

spec:

allowedUses:

- Workload

- Tunnel

blockSize: 26

cidr: 10.100.0.0/16

ipipMode: Always

➜ ~ k get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default be-go-6b6f5fc88d-mxxbg 1/1 Running 0 13m 10.100.235.131 worker1 <none> <none>

default be-py-6bc85fcb56-cn8cc 1/1 Running 0 13m 10.100.235.132 worker1 <none> <none>

default be-py-6bc85fcb56-lb4rk 1/1 Running 0 13m 10.100.189.68 worker2 <none> <none>

default fe-nginx-d7f6d6449-rzmll 1/1 Running 0 13m 10.100.189.67 worker2 <none> <none>

ingress-nginx ingress-nginx-admission-create-g4qqd 0/1 Completed 0 14m 10.100.235.129 worker1 <none> <none>

ingress-nginx ingress-nginx-admission-patch-znfw5 0/1 Completed 0 14m 10.100.189.66 worker2 <none> <none>

ingress-nginx ingress-nginx-controller-666487-z5td9 1/1 Running 0 14m 10.100.235.130 worker1 <none> <none>

kube-system calico-kube-controllers-77d59654f4-ctfst 1/1 Running 0 17m 10.100.219.66 master <none> <none>

kube-system calico-node-d9nj8 1/1 Running 0 17m 192.168.0.10 master <none> <none>

kube-system calico-node-mslzt 1/1 Running 0 17m 192.168.0.12 worker2 <none> <none>

kube-system calico-node-vzlk5 1/1 Running 0 17m 192.168.0.11 worker1 <none> <none>

kube-system coredns-7db6d8ff4d-pm4v9 1/1 Running 0 19m 10.100.219.65 master <none> <none>

kube-system coredns-7db6d8ff4d-zz9h5 1/1 Running 0 19m 10.100.219.67 master <none> <none>

kube-system etcd-master 1/1 Running 0 19m 192.168.0.10 master <none> <none>

kube-system kube-apiserver-master 1/1 Running 0 19m 192.168.0.10 master <none> <none>

kube-system kube-controller-manager-master 1/1 Running 0 19m 192.168.0.10 master <none> <none>

kube-system kube-proxy-6rlhv 1/1 Running 0 19m 192.168.0.12 worker2 <none> <none>

kube-system kube-proxy-6zrf8 1/1 Running 0 19m 192.168.0.10 master <none> <none>

kube-system kube-proxy-mz96w 1/1 Running 0 19m 192.168.0.11 worker1 <none> <none>

kube-system kube-scheduler-master 1/1 Running 0 19m 192.168.0.10 master <none> <none>

kube-system metrics-server-5df54c66b8-pzj84 1/1 Running 0 15m 10.100.189.65 worker2 <none> <none>

metallb-system controller-6dd967fdc7-2c8fg 1/1 Running 0 11m 10.100.189.69 worker2 <none> <none>

metallb-system speaker-4bm8l 1/1 Running 0 11m 192.168.0.10 master <none> <none>

metallb-system speaker-9kmcf 1/1 Running 0 11m 192.168.0.12 worker2 <none> <none>

metallb-system speaker-qnw8v 1/1 Running 0 11m 192.168.0.11 worker1 <none> <none>

ps aux | grep cluster-cidr

--cluster-cidr=10.100.0.0/16

helm install --dry-run cat-release ./cat-chart -f ./cat-chart/values.pi.yaml

# change CIDR blocks

k delete --grace-period=0 --force -nkube-system pod/coredns-7db6d8ff4d-5dv6d

CNI: Calico

Install Calico CNI (Container Network Interface) plugin to enable Pod networking!

You must deploy a Container Network Interface (CNI) based Pod network add-on such as Calico, flannel, weavenet so that your Pods can communicate with each other. Cluster DNS (CoreDNS) will not start up before a network is installed. Without CNI, the coredns pods are pending and podSubnet is not specified in the cluster configuration.

- 1-1. Install with manifest

# Check Pod Subnet is missing

kubectl get configmap -n kube-system kubeadm-config -o yaml

...

networking:

dnsDomain: cluster.local

#podSubnet: 10.100.0.0/16

serviceSubnet: 10.96.0.0/12

scheduler: {}

...

curl -O https://raw.githubusercontent.com/projectcalico/calico/v3.28.1/manifests/calico.yaml

vim calico.yaml

# Match POD_CIDR and CALICO_IPV4POOL_CIDR

kind: DaemonSet

apiVersion: apps/v1

metadata:

name: calico-node

namespace: kube-system

labels:

k8s-app: calico-node

spec:

template:

spec:

containers:

- name: calico-node

env:

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR

value: "10.100.0.0/16"

---

kubectl apply -f calico.yaml

# Verify Calico installation in your cluster.

kubectl get pods -nkube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-77d59654f4-59qlx 1/1 Running 0 21s

calico-node-mn2kl 1/1 Running 0 21s

calico-node-sndg4 1/1 Running 0 21s

calico-node-wmwpm 1/1 Running 0 21s

- 1-2. Install with Calico Operator - tigera-operator

# Install the operator on your cluster.

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.1/manifests/tigera-operator.yaml

custom-resources.yaml

# This section includes base Calico installation configuration.

# For more information, see: https://docs.tigera.io/calico/latest/reference/installation/api#operator.tigera.io/v1.Installation

apiVersion: operator.tigera.io/v1

kind: Installation

metadata:

name: default

spec:

# Configures Calico networking.

calicoNetwork:

ipPools:

- name: default-ipv4-ippool

blockSize: 26

cidr: 10.100.0.0/16

encapsulation: VXLANCrossSubnet

natOutgoing: Enabled

nodeSelector: all()

---

# This section configures the Calico API server.

# For more information, see: https://docs.tigera.io/calico/latest/reference/installation/api#operator.tigera.io/v1.APIServer

apiVersion: operator.tigera.io/v1

kind: APIServer

metadata:

name: default

spec: {}

- Custom Resource

curl https://raw.githubusercontent.com/projectcalico/calico/v3.28.1/manifests/custom-resources.yaml -O

kubectl create -f custom-resources.yaml

# Verify Calico installation in your cluster.

watch kubectl get pods -n calico-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5857c9dccf-f6bh2 1/1 Running 0 6m43s

calico-node-l65nq 1/1 Running 0 6m43s

calico-node-wtftp 1/1 Running 0 6m43s

calico-node-xj2kn 1/1 Running 0 6m43s

calico-typha-68cbb57dc7-752ch 1/1 Running 0 6m43s

calico-typha-68cbb57dc7-b49rn 1/1 Running 0 6m40s

csi-node-driver-5pt8r 2/2 Running 0 6m43s

csi-node-driver-gqps8 2/2 Running 0 6m43s

csi-node-driver-lkthq 2/2 Running 0 6m43s

- To determine the pod network CIDR, you can inspect the configuration of the CNI plugin installed in your cluster.

- Here's how you can check for some common CNI plugins:

# 1. `calico` CNI plugin

kubectl get ippools.crd.projectcalico.org -o yaml

apiVersion: v1

items:

- apiVersion: crd.projectcalico.org/v1

kind: IPPool

spec:

blockSize: 26

cidr: 10.100.0.0/16

Control your cluster from other machines

scp root@<control-plane-host>:/etc/kubernetes/admin.conf .

scp [email protected]:/home/mobb/.kube/config .

# `cluster` `context` `user` -> add this to existing `~/.kube/config`

# change names to 'pi' which can be identified by you...

kubectl --kubeconfig ./config get nodes

clusters:

- cluster: ...

contexts:

- context: ...

users:

- name: ...

kubectl config get-contexts

kubectl config use-context pi

kubectl get no

NAME STATUS ROLES AGE VERSION

master Ready control-plane 36m v1.30.3

worker1 Ready worker 33m v1.30.3

worker2 Ready worker 33m v1.30.3

kubectl top no

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 137m 3% 846Mi 10%

worker1 56m 1% 528Mi 6%

worker2 68m 1% 501Mi 6%

kubectl top pod -nkube-system

NAME CPU(cores) MEMORY(bytes)

calico-kube-controllers-77d59654f4-59qlx 2m 12Mi

calico-node-mn2kl 25m 117Mi

calico-node-sndg4 27m 117Mi

calico-node-wmwpm 24m 117Mi

coredns-7db6d8ff4d-kvzq2 2m 13Mi

coredns-7db6d8ff4d-mqmj9 2m 13Mi

etcd-master 20m 44Mi

kube-apiserver-master 48m 261Mi

kube-controller-manager-master 12m 56Mi

kube-proxy-7st54 1m 13Mi

kube-proxy-gcb6c 1m 12Mi

kube-proxy-wt555 1m 13Mi

kube-scheduler-master 3m 18Mi

metrics-server-5df54c66b8-zhr7h 4m 18Mi

# Powershell

# An alias that's created or changed by Set-Alias isn't permanent and is only available during the current PowerShell session.

Set-Alias -Name k -Value kubectl

k get pod

Metrics server ¶

curl -L https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml -o metrics-server.yaml

vim metrics-server.yaml

- args:

- --kubelet-insecure-tls=true

kubectl apply -f metrics-server.yaml

k get pod -nkube-system

NAME READY STATUS RESTARTS AGE

...

metrics-server-5df54c66b8-zhr7h 1/1 Running 0 35s

Nginx Ingress Controller ¶

- Method 1. Install with yaml manifest

curl -L https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.11.1/deploy/static/provider/cloud/deploy.yaml -o ingress-nginx.yaml

kubectl apply -f ingress-nginx.yaml

- Method 2. Install

helm

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

- Install

Nginx Ingress Controller

helm upgrade --install ingress-nginx ingress-nginx \

--repo https://kubernetes.github.io/ingress-nginx \

--namespace ingress-nginx --create-namespace

kubectl get pods -ningress-nginx

kubectl get svc -ningress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.102.120.211 <pending> 80:31362/TCP,443:32684/TCP 52s

ingress-nginx-controller-admission ClusterIP 10.99.130.218 <none> 443/TCP 52s

Metallb ¶

- A pure software solution for Nginx ingress controller: MetalLB

-

Preparation

- If you're using kube-proxy in IPVS mode, since Kubernetes v1.14.2 you have to enable strict ARP mode.

# kubectl edit configmap -n kube-system kube-proxy

# OR

# see what changes would be made, returns nonzero returncode if different

kubectl get configmap kube-proxy -n kube-system -o yaml | \

sed -e "s/strictARP: false/strictARP: true/" | \

kubectl diff -f - -n kube-system

# actually apply the changes, returns nonzero returncode on errors only

kubectl get configmap kube-proxy -n kube-system -o yaml | \

sed -e "s/strictARP: false/strictARP: true/" | \

kubectl apply -f - -n kube-system

- Installation by manifest

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.14.8/config/manifests/metallb-native.yaml

kubectl get pod -nmetallb-system

NAME READY STATUS RESTARTS AGE

controller-6dd967fdc7-xwmnn 1/1 Running 0 40s

speaker-876fv 1/1 Running 0 40s

speaker-s6xzp 1/1 Running 0 40s

speaker-wwtrm 1/1 Running 0 40s

- Configuration

- https://metallb.universe.tf/configuration/

k get no

NAME STATUS ROLES AGE VERSION

master Ready control-plane 41m v1.30.3

worker1 Ready worker 38m v1.30.3

worker2 Ready worker 38m v1.30.3

k get svc -ningress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.102.120.211 <pending> 80:31362/TCP,443:32684/TCP 26m

ingress-nginx-controller-admission ClusterIP 10.99.130.218 <none> 443/TCP 26m

k get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

fe-nginx-ingress nginx localhost 80 81m

# NOTE: Setting no IPAddressPool selector in an L2Advertisement instance is interpreted

# as that instance being associated to all the IPAddressPools available.

# DO NOT OVERLAP with POD_CIDR (10.100.0.0/16), and NODE_IPs (192.168.0.10 ~ 12) (master and worker nodes)

cat << EOF > metallb.yaml

---

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: first-pool

namespace: metallb-system

spec:

addresses:

- 192.168.0.201-192.168.0.220

autoAssign: true

---

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: default

namespace: metallb-system

spec:

ipAddressPools:

- first-pool

EOF

kubectl apply -f metallb.yaml

kubectl get svc -ningress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.102.120.211 192.168.0.201 80:31362/TCP,443:32684/TCP 164m

ingress-nginx-controller-admission ClusterIP 10.99.130.218 <none> 443/TCP 164m

- Edit Windows hosts File

- C:\Windows\System32\drivers\etc\hosts

192.168.0.201 catornot.org

curl -D- http://192.168.0.201 -H 'Host: catornot.org'

Application

helm install ...

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

be-go-6b6f5fc88d-mxxbg 1/1 Running 0 10m 10.100.235.131 worker1 <none> <none>

be-py-6bc85fcb56-cn8cc 1/1 Running 0 10m 10.100.235.132 worker1 <none> <none>

be-py-6bc85fcb56-lb4rk 1/1 Running 0 10m 10.100.189.68 worker2 <none> <none>

fe-nginx-d7f6d6449-rzmll 1/1 Running 0 10m 10.100.189.67 worker2 <none> <none>

Let's Encrypt Certificate

- Prerequisite-1: NGINX Ingress Controller

- Prerequisite-2: Assign a DNS name

- Install cert-manager

- Issuer: staging

- Deploy a TLS Ingress Resource

- Issuer - Production

Prerequisite-1: NGINX Ingress Controller

Our previous ingress-nginx installation works with default HTTP protocol. However, I wanted to secure the connection to my application applying certificate issued by CA (Certificate Authority). One of the most popular CA as of July 2024 is Let's Encrypt.

In Kubernetes environment, cert-manager provide Cloud Native certificate management, which includes certificate auto-renewal!

Prerequisite-2: Assign a DNS name

!IMPORTATNT: The external IP that is allocated to the ingress-controller is the IP to which all incoming traffic should be routed. To enable this, add it to a DNS zone you control, for example as www.example.com. This quick-start assumes you know how to assign a DNS entry to an IP address and will do so.

Since I use metallb the nginx-ingress-controller ip is private ip(192.168.0.201). So First, I have to setup my router's port-forwarding so that my router will forward public ip request into the private ip(192.168.0.201)!

Install cert-manager

We need to deploy cert-manager to our Kubernetes cluster. We can use Helm or plain Kubernetes manifests to install cert-manager.

- use plain Kubernetes manifest

# Default static install

curl -L https://github.com/cert-manager/cert-manager/releases/download/v1.15.2/cert-manager.yaml -o cert-manager.yaml

kubectl apply -f cert-manager.yaml

# You should see cert-manager, cert-manager-cainjector, and cert-manager-webhook

# The webhook might take a little longer to successfully provision than the others.

kubectl get pods -n cert-manager

- Check if the installtion of cert-manager is successful

cmctlis a CLI tool manage and configure cert-manager resources for Kubernetes

# isntall cmctl

OS=$(uname -s | tr A-Z a-z); ARCH=$(uname -m | sed 's/x86_64/amd64/' | sed 's/aarch64/arm64/');

curl -fsSL -o cmctl "https://github.com/cert-manager/cmctl/releases/latest/download/cmctl_${OS}_${ARCH}"

chmod +x cmctl

sudo mv cmctl /usr/local/bin

# or `sudo mv cmctl /usr/local/bin/kubectl-cert_manager` to use `kubectl cert-manager` instead.

# cmctl performs a dry-run certificate creation check against the Kubernetes cluster.

# If successful, the message The cert-manager API is ready is displayed.

# Check if the cert-manager API is ready

cmctl check api

The cert-manager API is ready

cmctl check api --wait=2m

The cert-manager API is ready

# End-to-end verify the installation

cat <<EOF > test-resources.yaml

apiVersion: v1

kind: Namespace

metadata:

name: cert-manager-test

---

apiVersion: cert-manager.io/v1

kind: Issuer

metadata:

name: test-selfsigned

namespace: cert-manager-test

spec:

selfSigned: {}

---

apiVersion: cert-manager.io/v1

kind: Certificate

metadata:

name: selfsigned-cert

namespace: cert-manager-test

spec:

dnsNames:

- example.com

secretName: selfsigned-cert-tls

issuerRef:

name: test-selfsigned

EOF

kubectl apply -f test-resources.yaml

kubectl describe certificate -n cert-manager-test

kubectl delete -f test-resources.yaml

What is ACME?

ACME is a protocol developed to automate the process of obtaining and managing SSL/TLS certificates. It standardizes the interactions between certificate authorities (CAs) and clients to streamline the process of proving domain ownership and issuing certificates.

What is Let's Encrypt?

Let's Encrypt is a nonprofit CA (certificate authority) that provides free SSL/TLS certificates to promote secure web communications. It uses the ACME protocol to automate the certificate management; the issuance and renewal of these certificates.

How ACME Works with Let's Encrypt?

Client Requests Certificate → Domain Validation → Issuance → Renewal

- A client (e.g., a web server or application) uses an ACME client (e.g. certbot) to request a certificate from Let's Encrypt.

- The ACME client and Let's Encrypt engage in a process to validate domain ownership through ACME challenges to prove that the client controls the domain.

- Once domain ownership is validated, Let's Encrypt issues the certificate and the client installs it.

- The client periodically renews the certificate through the ACME protocol, ensuring continued validity and security.

Issuer: staging

cert-manager mainly uses two different custom Kubernetes resources - known as CRDs - to configure and control how it operates, as well as to store state.

These resources are Issuers and Certificates.

An Issuer defines how cert-manager will request TLS certificates.

Issuers are specific to a single namespace in Kubernetes, but there's also a ClusterIssuer which is meant to be a cluster-wide version. ClusterIssuer resources apply across all Ingress resources in your cluster. If you are using a ClusterIssuer, remember to update the Ingress annotation cert-manager.io/issuer to cert-manager.io/cluster-issuer.

There are different types of Issuers. I will be using ACME Issuer. When you create a new ACME Issuer, cert-manager will generate a private key which is used to identify you with the ACME server.

BEWARE: Let's Encrypt server has a very strict rate limit (check with staging, then do production)

# Edit as your email address

kubectl create --edit -f https://raw.githubusercontent.com/cert-manager/website/master/content/docs/tutorials/acme/example/staging-issuer.yaml

# expected output: issuer.cert-manager.io "letsencrypt-staging" created

apiVersion: cert-manager.io/v1

# kind: Issuer

kind: ClusterIssuer

metadata:

name: letsencrypt-staging

spec:

acme:

# Email address used for ACME registration

# You must replace this email address with your own.

# Let's Encrypt will use this to contact you about expiring

# certificates, and issues related to your account.

email: [email protected]

# The ACME server URL

server: https://acme-staging-v02.api.letsencrypt.org/directory

# Name of a secret used to store the ACME account private key

privateKeySecretRef:

# Secret resource that will be used to store the account's private key.

name: letsencrypt-staging

# Enable the HTTP-01 challenge provider

# Add a single challenge solver, HTTP01 using nginx

solvers:

- http01:

ingress:

ingressClassName: nginx

# You should see the issuer listed with a registered account.

k get clusterissuer

NAME READY AGE

letsencrypt-staging True 11s

k describe clusterissuer letsencrypt-staging

# PRODUCTION ClusterIssuer

kubectl create --edit -f https://raw.githubusercontent.com/cert-manager/website/master/content/docs/tutorials/acme/example/production-issuer.yaml

apiVersion: cert-manager.io/v1

# kind: Issuer

kind: ClusterIssuer

metadata:

name: letsencrypt-prod

spec:

acme:

# The ACME server URL

server: https://acme-v02.api.letsencrypt.org/directory

# Email address used for ACME registration

email: [email protected]

# Name of a secret used to store the ACME account private key

privateKeySecretRef:

name: letsencrypt-prod

# Enable the HTTP-01 challenge provider

solvers:

- http01:

ingress:

ingressClassName: nginx

k get clusterissuer

NAME READY AGE

letsencrypt-prod True 4s

letsencrypt-staging True 109s

k describe clusterissuer letsencrypt-prod

Deploy a TLS Ingress Resource

- To request the TLS certificate, edit ingress resource to add the

annotationon the ingress with ingress-shim. - (Another method) or directly create a cretificate resource

vim ./cat-chart/values.pi.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: catornot-ingress

annotations:

# cert-manager.io/issuer: "letsencrypt-staging"

cert-manager.io/cluster-issuer: "letsencrypt-staging"

# ...

# Argo CD: OutOfSync -> deploy to cluster!

- Cert-manager will read these annotations and use them to create a certificate, which you can request and see:

# Cert-manager will read these annotations and use them to CREATE a CERTIFICATE, which you can request and see:

k get certificate -w

NAME READY SECRET AGE

catornot-tls False catornot-tls 14s

# cert-manager reflects the state of the process for every request in the certificate object

kubectl describe certificate catornot-tls

- Once complete, cert-manager will have created a secret with the details of the certificate based on the secret used in the ingress resource. You can use the describe command as well to see some details:

k get secret

NAME TYPE DATA AGE

catornot-tls-xq4k4 Opaque 1 77s

kubectl describe secret catornot-tls-xq4k4

Issuer - Production

Now that we have confidence that everything is configured correctly, you can update the annotations in the ingress to specify the production issuer:

vim ./cat-chart/values.pi.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: catornot-ingress

annotations:

# cert-manager.io/issuer: "letsencrypt-prod"

cert-manager.io/cluster-issuer: "letsencrypt-prod"

# ...

# Argo CD: OutOfSync -> deploy to cluster!

# You will also need to DELETE the existing secret, which cert-manager is watching and will cause it to reprocess the request with the updated issuer.

kubectl delete secret quickstart-example-tls

# Cert-manager will read these annotations and use them to create a certificate, which you can request and see:

kubectl get certificate

# ---> This will start the process to get a new certificate, and using describe you can see the status. Once the production certificate has been updated, you should see the example KUARD running at your domain with a signed TLS certificate.

# cert-manager reflects the state of the process for every request in the certificate object

kubectl describe certificate quickstart-example-tls

# Once complete, cert-manager will have created a secret with the details of the certificate

# based on the secret used in the ingress resource. You can use the describe command as well to see some details:

kubectl describe secret quickstart-example-tls

# ---> You can see the current state of the ACME Order by running kubectl describe on the Order resource that cert-manager has created for your Certificate:

kubectl describe order quickstart-example-tls-889745041

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Created 90s cert-manager Created Challenge resource "quickstart-example-tls-889745041-0" for domain "www.example.com"

# Here, we can see that cert-manager has created 1 'Challenge' resource to fulfill the Order. You can dig into the state of the current ACME challenge by running kubectl describe on the automatically created Challenge resource:

kubectl describe challenge quickstart-example-tls-889745041-0

# From above, we can see that the challenge has been 'presented' and cert-manager is waiting for the challenge record to propagate to the ingress controller. You should keep an eye out for new events on the challenge resource, as a 'success' event should be printed after a minute or so (depending on how fast your ingress controller is at updating rules):

kubectl describe challenge quickstart-example-tls-889745041-0

...

Status:

Presented: false

Processing: false

Reason: Successfully authorized domain

State: valid

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Started 71s cert-manager Challenge scheduled for processing

Normal Presented 70s cert-manager Presented challenge using http-01 challenge mechanism

Normal DomainVerified 2s cert-manager Domain "www.example.com" verified with "http-01" validation

Docker Registry ¶

Local Registry

Set up local docker registry that Kubernetes Pods will pull images from. I used worker1 node to set up a temporary docker registry. I will set-up another raspberry-pi machine in the future.

- Self-signed Certificate

mkdir -p certs

# Generate SSL Certificates:

openssl req -newkey rsa:4096 -nodes -sha256 -x509 \

-keyout certs/domain.key \

-days 365 \

-out certs/domain.crt \

-subj "/CN=192.168.0.11"

# Run the Registry Container with HTTPS:

docker run -d -p 5000:5000 \

--restart=always \

--name registry \

-v $(pwd)/certs:/certs \

-e REGISTRY_HTTP_ADDR=0.0.0.0:5000 \

-e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/domain.crt \

-e REGISTRY_HTTP_TLS_KEY=/certs/domain.key \

registry:2

# Distribute the CA Certificate:

sudo mkdir -p /etc/docker/certs.d/192.168.0.11:5000

sudo cp certs/domain.crt /etc/docker/certs.d/192.168.0.11:5000/ca.crt

docker tag your-image 192.168.0.11:5000/your-image

docker push 192.168.0.11:5000/your-image

- Configure Containerd to Use the Secure Registry

# Edit the containerd Configuration:

sudo vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."192.168.0.10:5000"]

endpoint = ["https://192.168.0.10:5000"]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.0.10:5000".tls]

ca_file = "/etc/docker/certs.d/192.168.0.10:5000/ca.crt"

sudo systemctl restart containerd

Dockerhub

- Authenticate to dockerhub to increase image pull rate limit.

- increase-rate-limits

sudo vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.configs."registry-1.docker.io".auth]

username = "jnuho"

password = "******"

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry-1.docker.io"]

endpoint = ["https://registry-1.docker.io"]

sudo systemctl restart containerd

Argo CD ¶

"Argo CD is implemented as a Kubernetes controller which continuously monitors running applications and compares the current, live state against the desired target state (as specified in the Git repo)"